Four lessons from a year building tools for machine learning

Raza Habib

Four lessons from a year building tools for machine learning

I want to share with you some of the most surprising lessons about how to build tooling for machine learning, what is needed going forwards and why domain experts will play a much larger role in the future of AI.

Over the last year Humanloop has been building a new kind of tool for training and deploying natural language processing (NLP) models. We’ve helped teams of lawyers, customer service workers, marketers and software developers rapidly train AI models that can understand language, then use them immediately. We started focused on using active learning to reduce the need for annotated data but quickly learned that much more was needed.

What we really needed was a new set of tools and workflows designed from first principles for the challenges of working with AI. Here is some of what we learned.

1. Subject-matter experts have as much impact as data scientists

In early 2011 the demand for deep learning expertise was so high that Geoff Hinton could auction himself to Google for 44 million dollars. Today that’s no longer true.

Much of what was hard in 2011 has been commoditized. You can use state of the art models by importing libraries and most research breakthroughs are quickly incorporated. Even after a PhD in deep learning, I continue to be surprised by how well standard models can perform out-of-the-box on a wide range of use cases.

Building machine learning services is still hard but much of the challenge is getting the right data.

Perhaps, surprisingly, technical ML knowledge is becoming less useful than domain expertise.

For example, we worked with a team who wanted to know the outcome of over 80,000 historic legal judgments. Manually processing these documents was totally infeasible and would have cost hundreds of thousands of dollars in lawyer time. To solve this task, a data scientist alone wasn’t really helpful. What we really needed was a lawyer.

The traditional data-science workflow sees data annotation as just a first step before model training. We realized that placing data annotation/curation at the centre of the workflow actually gets you to results much faster. It lets subject-matter experts take the lead and collaborate more easily with data scientists. We’ve seen that it produces higher quality data and higher quality models.

A team of two lawyers annotated data in the Humanloop platform and a model was automatically trained in parallel using active learning. In just a few hours the lawyers had trained a model that provided the outcome of all 80,000 judgments without needing the input of a data scientist at all.

It’s not just lawyers though. We’ve seen teams of doctors annotating to train medical chat bots; financial analysts labeling for named entity recognition and scientists annotating data so that papers can be searched at scale.

2. The first iteration is always on the labeling taxonomy

Training a machine learning model usually starts with labeling a dataset. When we first built the Humanloop platform we thought choosing a labeling taxonomy was something you did at the start of a project and were done.

Most teams underestimate how hard it is to define a good labeling taxonomy without exploring the data

What we quickly realized was that as soon as teams start annotating data, they find that initial guess at what categories they wanted is wrong. Commonly there are classes in the data they never thought about, or that are so rare they’re best combined into a broader category. Teams are often also surprised by how hard it is to get consensus about what even simple classes mean.

There is almost always a discussion between data scientists, project managers and annotators on how to update the labeling taxonomy after the project starts.

Having data curation at the centre of the ML workflow makes it much easier to get the different stakeholders to consensus quickly. To make this easier, we added the ability for project managers to edit their labeling taxonomy during annotation. The Humanloop model and active learning system automatically respect any changes to labeling. We made it possible for teams to flag, comment and discuss example data points.

3. The ROI on fast feedback is huge

One of the surprising benefits of the active learning platform we built is that it actually allows for rapid prototyping and de-risking of projects. In the Humanloop platform as a team annotates, a model is trained in real time and provides statistics on model performance.

A lot of machine learning projects fail. According to Algorithmia it can be as high as 80% of projects that never make it into production. Often this is because goals are unclear, the input data is too poor quality to predict the output or models get stuck waiting to be productionized. Senior management becomes reluctant to dedicate resources to projects that have high uncertainty and so many good opportunities are missed.

Although we didn’t plan for it, we realized that teams were using Humanloop's fast early feedback to assess the feasibility of their projects. By uploading a small dataset, and labeling a handful of examples they could get a sense of how well their project might work. This meant some projects that would have probably failed didn’t go ahead and others received more resources quickly because teams knew that they would work. Often this early exploration was being done by product managers with no machine learning background at all.

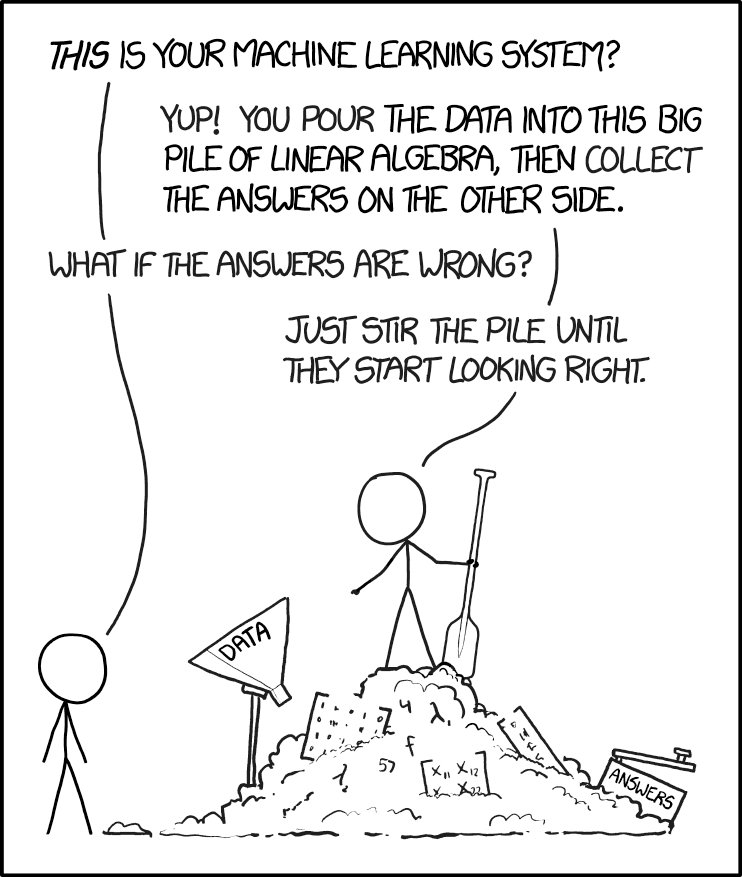

4. ML tools should be data-centric but model-backed

Most existing tools for training and deploying machine learning (MLOps) were built as if for traditional software. They focus on the code rather than the data and they target narrow slices of the ML development pipeline. There are MLOps tools for monitoring, for feature stores, for model versioning, for dataset versioning, for model training, for evaluation stores and more. Almost none of these tools make it easy to actually look at and understand the data that the systems are learning from.

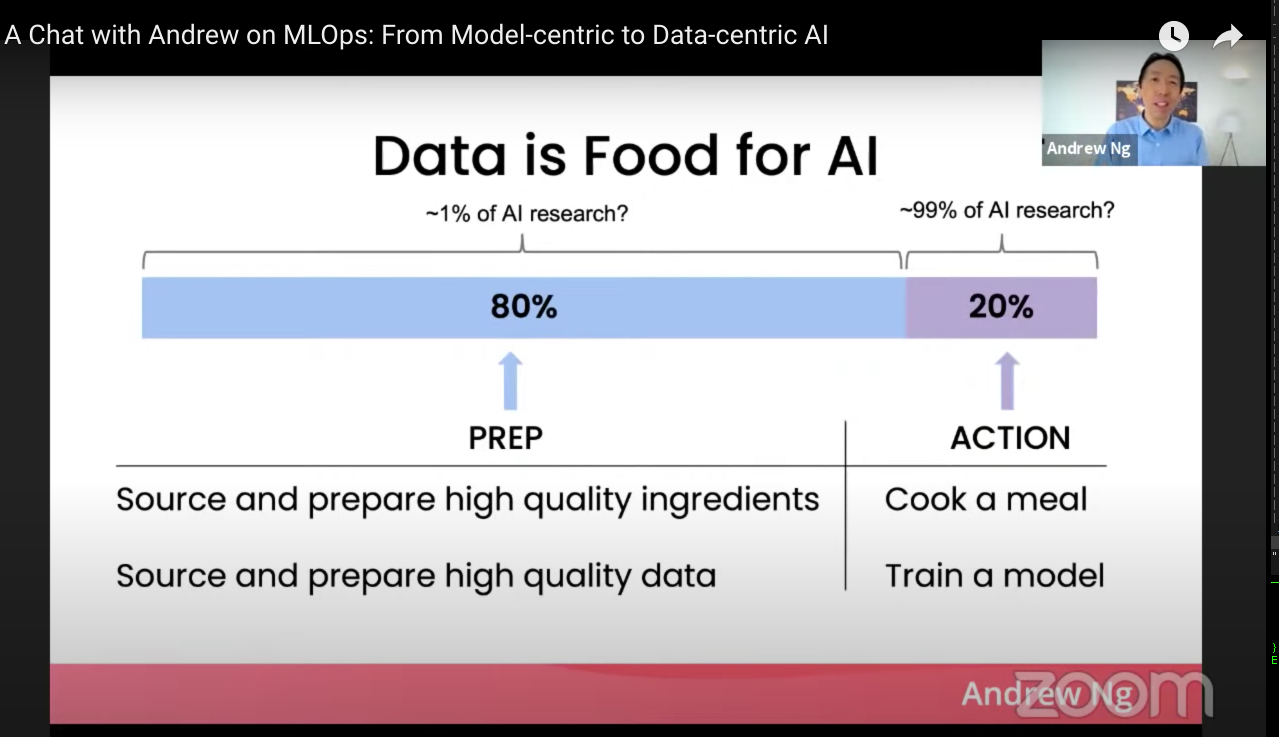

Recently there’s been a call from people like Andrew Ng and Andreij Kaparthy to have ML tools that are data centric. We totally agree that machine learning requires teams to be much more focused on their data sets but what we’ve learned is that the best version of these tools needs to be closely coupled with the model.

Most of the benefits we’ve seen from Humanloop platform has come from the interplay between the data and the model:

- At exploration: the model surfaces rare classes and provides feedback on how hard categories are to learn.

- During training: the model finds the highest value data to label so that it’s possible to get to high performing models with fewer labels.

- At review: the model makes it much easier to find mis-annotations. The Humanloop platform surfaces examples where the model’s prediction disagrees with the domain expert labelers with high confidence. Finding and correcting mislabeled data points can often be the most effective way to improve model performance.

At each stage of the ML development process there are benefits from combining the data and model building process. By having a model learn as you annotate, deployment is no longer a "waterfall" moment. Models learn continuously and can be easily kept up to date.

Over the past year we think we’ve made significant steps in building the new tools needed to make machine learning much easier, starting with NLP. We’ve now seen domain experts in numerous industries contribute to the training of AI models and are excited to see what new applications will be built on top of Humanloop.

If you have an idea for what you would build if your software could read, we’d love to show you how Humanloop works.

About the author